The Case for the AI Context Librarian

Decentralization worked in the copilot era, but autonomous agents need a single source of truth

I was at a cottage with my kids a few summers ago when Apple Maps tried to kill us. We were driving to mini‑golf, following directions that grew stranger by the minute until we found ourselves at a mining site. The app was confident we’d arrived. I was sure we hadn’t.

So I ignored it, turned around, switched map apps, and found a better route. No drama. No harm.

We have similar experiences with chatbots today. They fail, we catch them, we fix the issue.

But just as self‑driving cars heighten risk from bad maps, AI agents heighten risk from bad context. As we move from copilots to autopilots, from tools that assist to agents that work for you, errors you once caught instantly can propagate across fleets of autonomous executions.

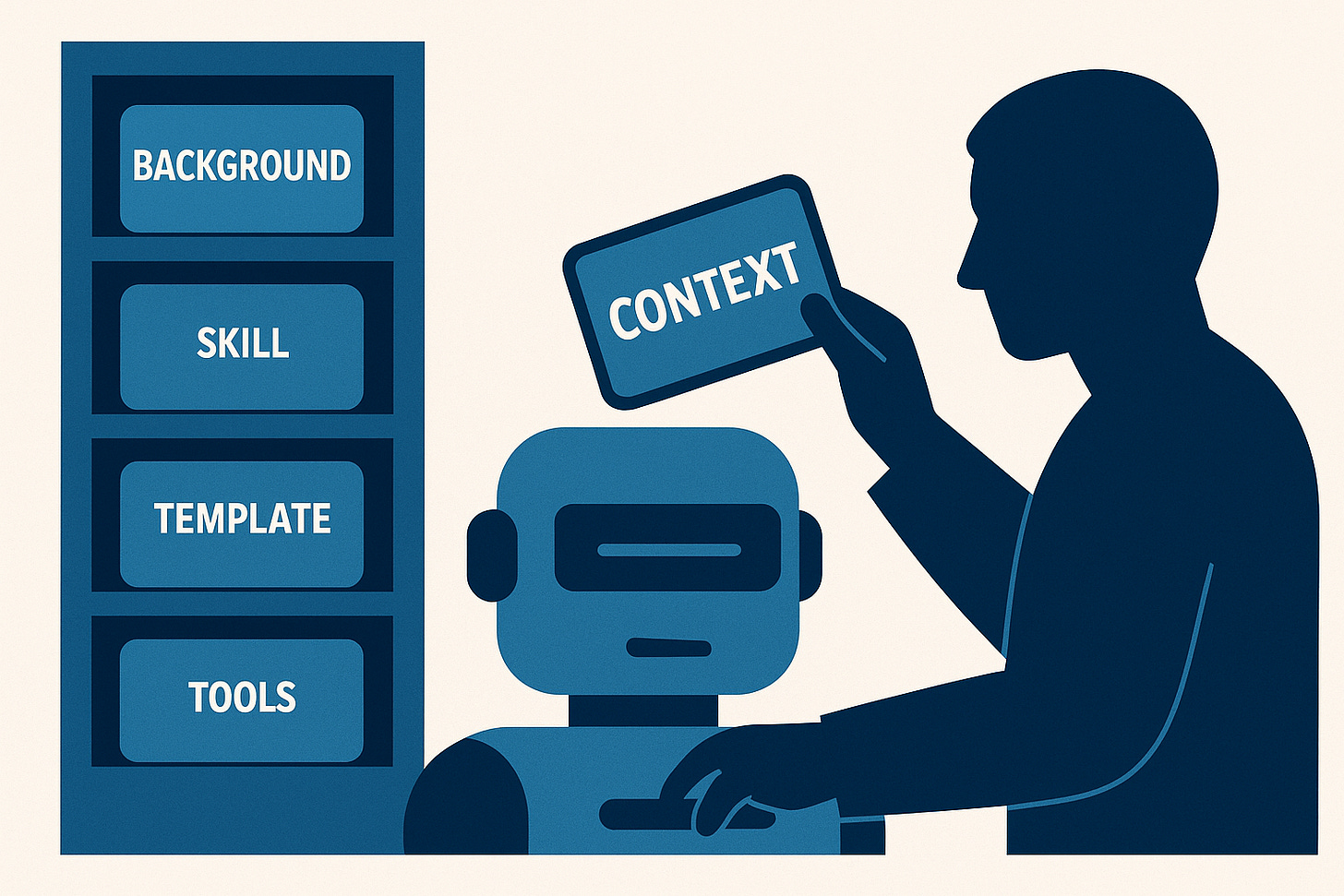

So if organizations want to harness agents’ potential, they must ensure those agents have good maps—accurate, up-to-date, optimized skills, background information, templates, tools, and examples.

Someone has to be accountable for this.

Enter the AI Context Librarian.

Bottom-up works until it doesn’t

As I previously wrote, companies that successfully embrace AI do so bottom-up. They democratize access to AI tools and encourage people to experiment.

I’ve seen this first-hand. At my company, for example, we have way more custom GPTs than employees. People build small, targeted assistants for their roles. A popular one turns timelines into bullet descriptions; another helps with recruiting tasks. These get shared, tweaked, and remixed.

This decentralized creativity unlocks use cases that are hard to find top-down. (Would a CEO know how frequently people turn timelines into bullet lists?) But it can lead to duplication, staleness, and suboptimal performance, with no central authority to evaluate, optimize, and bless the best tools.

It’s less of an issue when humans supervise. They can pick their preferred custom GPT, review and revise the output, and edit instructions to taste.

But it’s a big risk when humans step out of the execution loop.

(Aside: If you haven’t reached the copilot stage yet, skipping straight to autonomous agents could feel like cultural whiplash. So note that my intended audience for this article is people in organizations with widespread copilot adoption that are now deploying agents.)

Don’t execute yourself off a cliff

Where I work, we create marketing materials for life science companies. Imagine if we used an autonomous agent to create dozens of localized social ads, but gave it:

outdated prescribing information,

last year’s brand messaging,

incomplete audience details,

instructions for a junior-level writing skill,

obsolete guidance for social media post character limits.

The agent would dutifully execute. Within a day, we’d have hundreds of unusable outputs. Our creative and regulatory teams would catch this, but it would waste time.

It might also convince leadership that “agents aren’t ready,” when the real issue is that they lacked good context.

Context is critical infrastructure

Autonomous agents need far more than a clever prompt. A good analogy is this:

Imagine you’re training a new employee and giving them a task they’ve never done.

To be successful, they need:

a skill definition encompassing best practices to perform like an expert[1],

background and a brief covering things like brand, audience, and objectives,

templates and examples that show what good looks like,

tools like libraries and API endpoints, or web apps for browser-based agents.

This bundle—call it a context pack—becomes the agent’s map.

Bad directions, wrong destination.

So engineering and maintaining quality context packs is critical.
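To make the idea of a context pack more concrete, here is a minimal Python sketch of how one might be represented and checked. It assumes that each component (skill, background, template, tool) records an accountable human owner and a last-reviewed date. The class names, fields, example items, and the 180-day review window are all hypothetical illustrations, not an existing tool or standard.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical component categories, mirroring the four ingredients listed above.
REQUIRED_KINDS = ("skill", "background", "template", "tool")


@dataclass
class ContextItem:
    """One component of a context pack (illustrative structure only)."""
    kind: str            # one of REQUIRED_KINDS
    name: str
    owner: str           # the human accountable for keeping this item current
    last_reviewed: date  # when the owner last confirmed it is accurate


@dataclass
class ContextPack:
    """Everything an agent is handed for one task: its 'map'."""
    task: str
    items: list[ContextItem] = field(default_factory=list)

    def missing_kinds(self) -> list[str]:
        """Return required component kinds that the pack lacks entirely."""
        present = {item.kind for item in self.items}
        return [kind for kind in REQUIRED_KINDS if kind not in present]

    def stale_items(self, today: date, max_age: timedelta) -> list[ContextItem]:
        """Return items whose last review is older than max_age."""
        return [item for item in self.items if today - item.last_reviewed > max_age]


# Example: a pack for the social-ad scenario, with made-up names and dates.
example_pack = ContextPack(
    task="localized social ads",
    items=[
        ContextItem("skill", "social-copywriting", "creative lead", date(2024, 1, 10)),
        ContextItem("background", "prescribing-information", "regulatory lead", date(2023, 3, 1)),
        ContextItem("template", "approved-ad-examples", "creative lead", date(2024, 2, 1)),
    ],
)

today = date(2024, 3, 1)
print("Missing:", example_pack.missing_kinds())
print("Stale:", [i.name for i in example_pack.stale_items(today, timedelta(days=180))])
```

Here the check would flag the absent tool component and the year-old prescribing information. A check like this only surfaces gaps and items overdue for review. Deciding whether the content is actually accurate still falls to the human who owns it.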

Elevating human accountability

This isn’t a task we can automate today. I’ve tried.

Yes, AI can do most of the work. It can gather, research, summarize, and synthesize background information, for example. And it can write and refine skills.

But it can’t be accountable. At least until we have AI-run companies with AI legal liability (not soon), humans can’t step out of the loop entirely. They must step up into a higher loop: governing the systems that execute.

That’s why we need AI Context Librarians. These people:

create and maintain libraries of skills that reflect best practices,

ensure comprehensive and up-to-date background information,

curate templates and examples,

evaluate and provide access to tools,

create and run evaluations to optimize performance,

ensure everything stays curated and widely accessible.

Organizations that understand the need for this role can effectively scale agents. Organizations that don’t will blame technology for what’s really context debt.[2]

That day at the cottage, Apple Maps was wrong, but I wasn’t. I could see the mining site. I could course‑correct. No harm done.

But in the autopilot era, we’re giving the wheel to machines. If they drive into mining sites, that’s on us.

How I used AI for this article: First, I used ChatGPT Atlas to summarize a LinkedIn post I had written on this topic, along with comments on that post and my responses. I then dictated more thoughts into ChatGPT and had it prompt me to dig deeper. Once I felt I had enough material, I asked it to sketch a detailed outline based on a narrative nonfiction outline I provided. We jammed on this together until I felt good. Then I had it produce a first draft, which I edited, including extensive rewriting and refining. Finally, I had it generate an image, and we jammed on that until we were both happy. Note that I left in and added some em dashes (—) because, as a former journalist who studied magazine writing, I used these long before we had ChatGPT! I hope they soon stop being seen as a mark of AI writing.

[1] See, for example, Anthropic’s Skills.

[2] Credit to Pranav Mehta for the term “context debt,” from this comment on LinkedIn.
