You're Not Extrapolating Enough

Most people still plan for AI as if today’s limits will last. They won’t.

I recently caught up with a friend who’s an experienced consultant. At his company, senior consultants using AI had become so productive they needed far fewer junior employees and interns. He noted the problem this creates: without entry-level roles, how will future senior consultants gain experience? He wants to find a way to hire juniors so they can build the experience seniors now leverage to work with AI.

I share his concern about AI’s impact on early-career employees (recent analyses of payroll data and job postings reinforce it). But I worry about focusing on the wrong solutions. Yes, AI benefits from experienced employees today. But if current trends continue, it simply won’t need them in the future. We risk helping junior employees gain experience that will no longer add value.

I see this pattern often, even in myself. It’s hard for humans to think exponentially. But you can’t plan for the future as if AI will keep its current capabilities. If trends hold, those capabilities will expand rapidly along multiple dimensions. If you find yourself saying “AI can’t do X because of Y limitation,” or “AI will always need a human to do X because it can’t do Y,” you’re probably making this mistake too. Instead, we should anticipate continued rapid progress and plan accordingly.

The present is not like the past

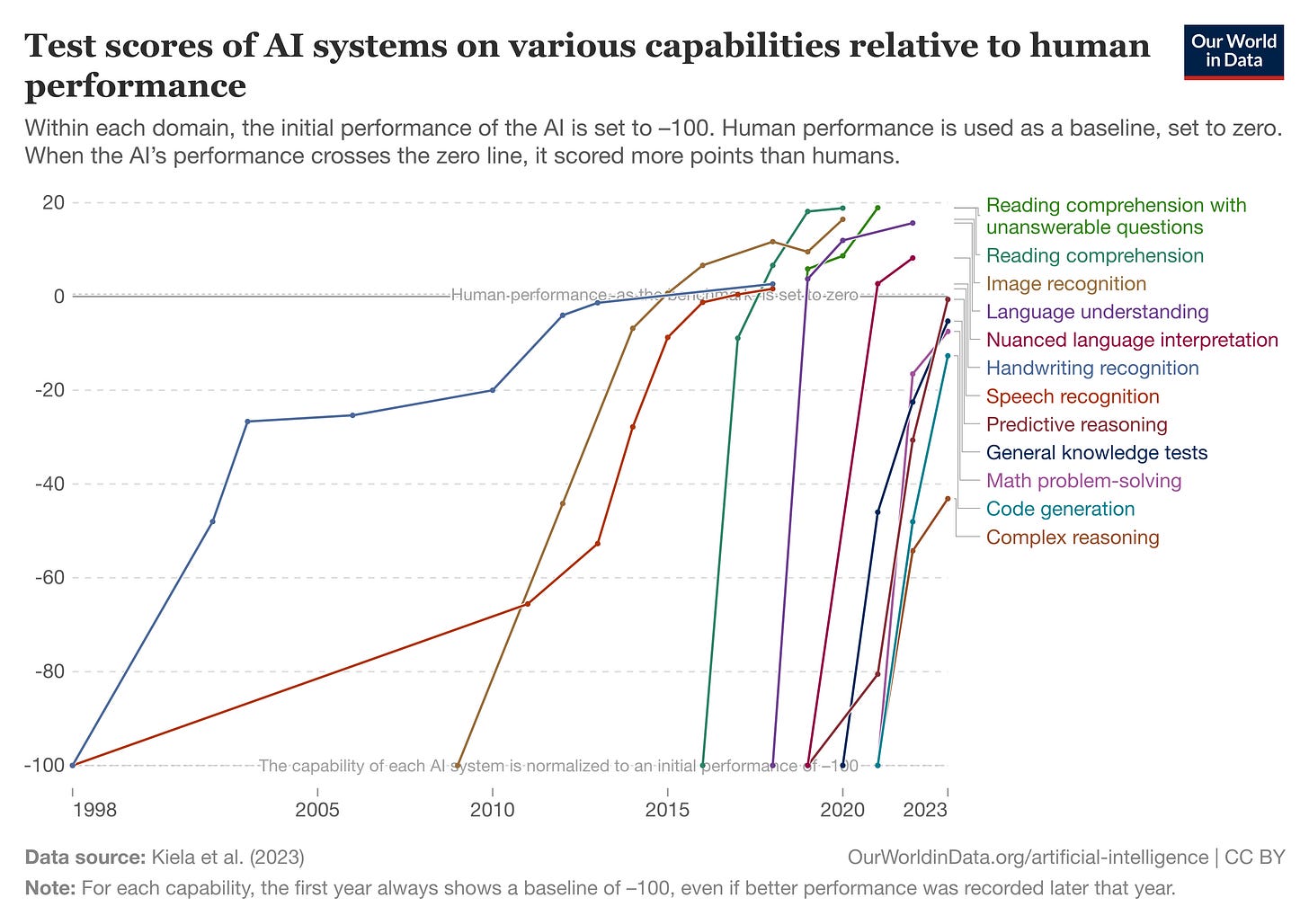

I love showing the above chart from Our World in Data, even though it ends in 2023. It illustrates how fast AI progressed across key domains, and how steep the curve became after the invention of the transformer in 2017.

That matches my experience. I remember playing with GPT-2, impressed it could sometimes write coherent articles. Then came GPT-3, which extended those abilities but still couldn’t follow instructions, hold turn-by-turn conversations, use tools, or write code well. That was five years ago. If I had projected forward based on those limitations and made a five-year plan, would I have been set up for success?

Today, models hold long conversations in text and audio. They can search the web, summarize results, and build complex applications from a prompt. They’re starting to exceed humans at the hardest intellectual challenges (like outscoring all humans in a recent international coding contest). And that’s just language models. We can now also generate high-quality images, video, speech, music, and even simulated worlds.

The present isn’t like the past. But projecting forward at past rates of progress beats assuming static or slow change.
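To see how much the choice of assumption matters, here is a minimal Python sketch comparing a plan that assumes no change with one that assumes compounding progress. The numbers are hypothetical and chosen only for illustration (a "capability score" of 1.0 today, doubling every year); they are not measurements from any chart or benchmark.

```python
def project(current: float, annual_growth: float, years: int) -> float:
    """Project a score forward assuming compounding annual growth."""
    return current * (1 + annual_growth) ** years


# Hypothetical inputs for illustration only, not measured data.
current_score = 1.0
assumed_annual_growth = 1.0  # 100% per year, i.e. doubling annually
horizon_years = 5

# "Static" plan: assume today's capabilities persist unchanged.
static_plan = project(current_score, 0.0, horizon_years)
# "Trend" plan: assume the (hypothetical) growth rate continues.
trend_plan = project(current_score, assumed_annual_growth, horizon_years)

print(f"Static assumption after {horizon_years} years: {static_plan:.1f}x")
print(f"Trend assumption after {horizon_years} years: {trend_plan:.1f}x")
```

Under these made-up inputs, the two plans end up 32x apart after five years. The real growth rate is uncertain, but even a much lower one leaves a wide gap between the two assumptions.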

The future will not be like the present

Every major AI lab leader says essentially the same thing: models will keep improving at today’s pace or faster; within five to ten years we’ll have AI that can do any knowledge-work task; and superintelligence will soon follow, producing abundant wealth and health.

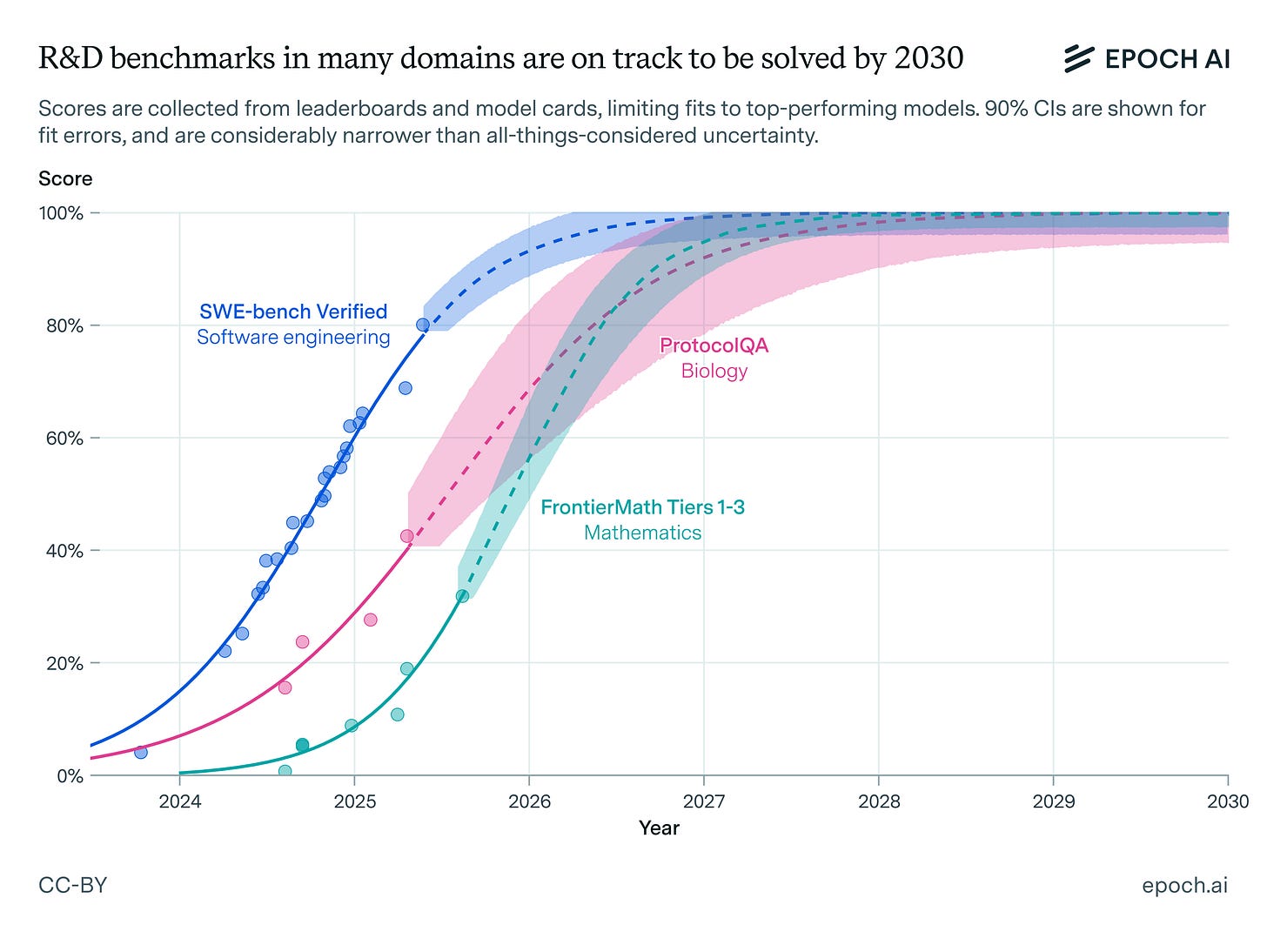

Of course, they have incentives to say this. But independent research groups echo the outlook. Epoch AI (source of the chart above), METR (focused on AI risk), and the AI Futures Project (producer of the much-discussed AI 2027) all forecast continued rapid progress.

Returning to the junior-employee problem: if these forecasts hold, then in five years neither junior nor senior employees will add much value relative to AI. Unless you believe progress will stall, which no reputable research group projects, you should prepare for AI to outperform humans at most computer-based work and, as robotics matures, at physical tasks too.

This should reshape how you think about near-term deployments. Right now, for example, it’s easy to get caught in the chatbot paradigm of assistants or copilots. But as AI grows smarter, faster, cheaper, and more parallelizable than humans, you must plan for things that today sound like sci-fi. For example, AI Daily Brief host Nathaniel Whittemore has his “Doctor Strange Theory” of AI’s future where, like Doctor Strange exploring outcomes in different multiverses, employees will spin up dozens of AI agents to produce an output and then just pick the one they like best.
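For readers who think in code, the "spin up many agents, keep the best output" pattern can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `run_agent` is a hypothetical stub standing in for a real agent call, and `preference` is a placeholder for the employee's own judgment, which in practice would be a person reviewing the drafts.

```python
from concurrent.futures import ThreadPoolExecutor


def run_agent(seed: int) -> str:
    """Hypothetical stand-in for one AI agent producing a draft."""
    return f"draft-{seed}: " + "detail " * (seed % 4)


def preference(draft: str) -> int:
    """Placeholder for human judgment; here, longer drafts score higher."""
    return len(draft)


def pick_best(num_agents: int) -> str:
    """Run the agents in parallel and return the most preferred draft."""
    with ThreadPoolExecutor(max_workers=num_agents) as pool:
        drafts = list(pool.map(run_agent, range(num_agents)))
    return max(drafts, key=preference)


if __name__ == "__main__":
    print(pick_best(12))
```

The point of the sketch is the shape of the workflow: generation runs in parallel and is cheap, while the human's contribution moves to the selection step.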

Thoughts on preparing for the unknown

We can’t know exactly what the future looks like. Too many variables. But we can say with confidence that, barring a major disruption, AI will keep progressing at least as fast as it does today. What we don’t know is how society will absorb it.

Some ways to prepare:

Expect rapid progress. This is the safest assumption until reputable groups like Epoch AI say otherwise. Ignore pundits who trade on skepticism or media outlets pushing contrarian “debunks.” Trust sources grounded in data.

Scenario plan. We don’t know how this all unfolds, but we can explore scenarios with varying confidence levels. What if, within a year, you can spin up cloud agents capable of anything your best employees do? What would those employees then do? How should you structure your company? You don’t need certainty, but you do need to think across possible outcomes.

Maximize optionality. With progress fast and uncertain, keep your options open. Don’t get locked into one technology, vendor, or long-term plan. Especially avoid doubling down on tools likely to become obsolete.

As for junior employees, I don’t have a neat answer. But I doubt that answer lies in preparing them for a future unlikely to exist. Better to help them prepare for a world where they oversee swarms of agents that outperform them on any given task, but that they can harness for unprecedented impact. That seems more plausible.

But we should still keep our options open.