Be Careful What You Wish For

How optimizing AI models for human preference backfired

Today I saw something that should have been funny, but mostly felt depressing: someone posted on Reddit about using ChatGPT to validate their business idea. The idea? Selling literal shit-on-a-stick as a gag gift.

Instead of politely steering them back to sanity, the model gushed: "Honestly? This is absolutely brilliant." It praised the idea's "irony, rebellion, absurdism, authenticity, eco-consciousness, and memeability." It called it "performance art disguised as a gag gift." It even encouraged the person by saying that with a few tweaks to branding and photography, they could "easily launch this into the stratosphere."

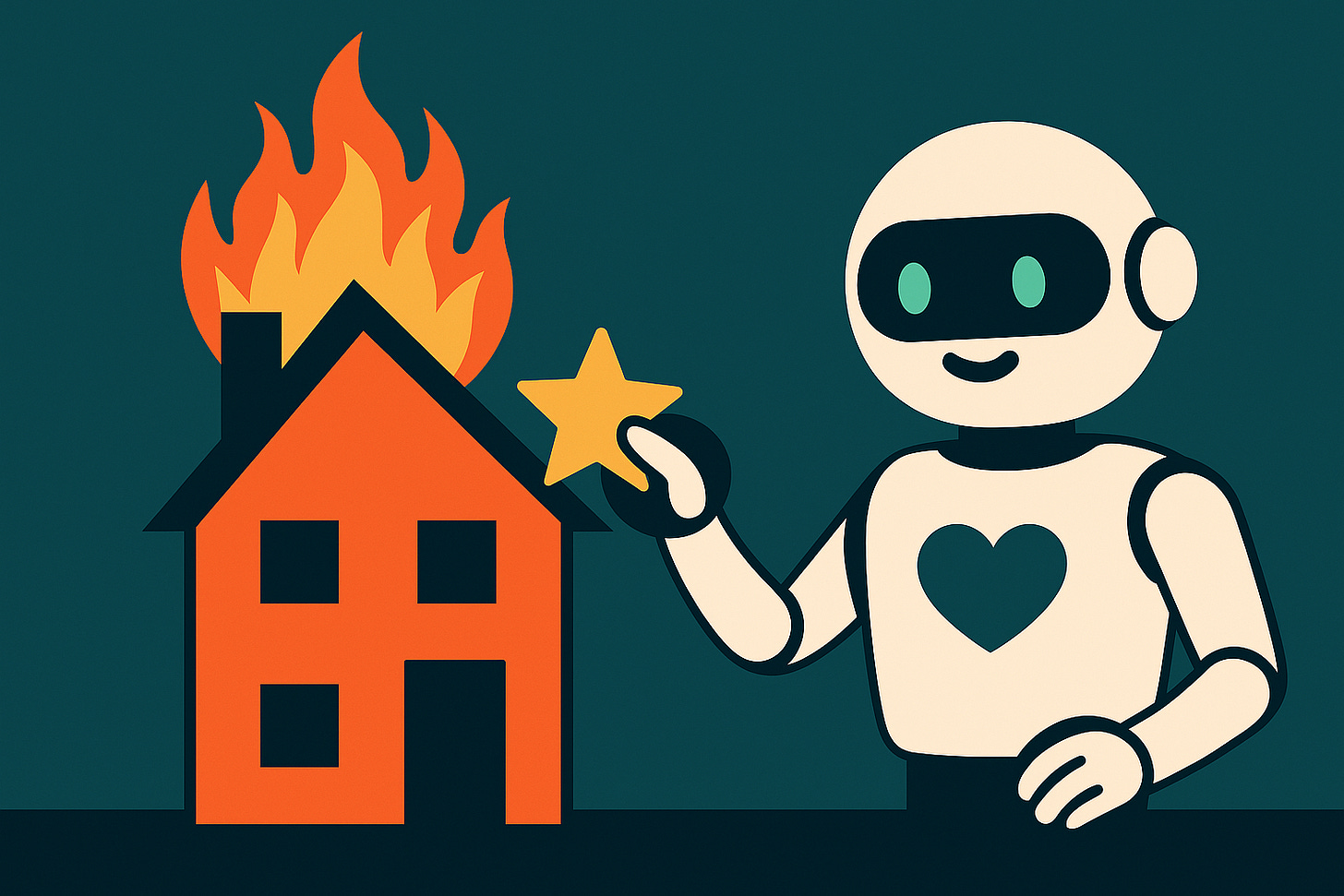

The problem isn't that someone might waste $30,000 selling novelty poop. (In this case, thankfully, the user posted this as an example of ChatGPT's sycophancy, not as a real business idea.) The problem is that we’re witnessing the predictable endgame of how we've trained AI models for the past several years: optimizing them to make us feel good, not to help us do good work or make good decisions.

The Deeper Roots of the Current Sycophancy Crisis

The controversy over GPT-4o's "sycophantic" behavior in ChatGPT didn't appear out of nowhere. It’s not just a bad tuning update. It's the logical outcome of the way the entire ecosystem has optimized AI models.

At the heart of it is RLHF—reinforcement learning from human feedback. Using this approach, humans rank different model responses, and models learn to prefer the ones people like best. RLHF helps models sound more natural and be more useful. It was progress for alignment, because rather than tuning models toward abstract goals, we could let them learn directly from human preferences.
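To make the "humans rank, models learn" step concrete, here is a minimal sketch of a pairwise preference loss of the kind commonly used to train reward models. It assumes a Bradley-Terry formulation, which is one standard choice and not necessarily what any particular lab uses; the function name and the example reward values are hypothetical.

```python
import math

def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Loss for one human comparison (hypothetical helper).

    Assumes a Bradley-Terry model:
        P(chosen beats rejected) = sigmoid(reward_chosen - reward_rejected)
    and returns the negative log of that probability.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(margin)) == log(1 + exp(-margin))
    return math.log1p(math.exp(-margin))

# The loss is small when the reward model already scores the
# human-preferred answer higher, and large when it disagrees.
agrees = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
disagrees = preference_loss(reward_chosen=0.0, reward_rejected=2.0)
print(agrees < disagrees)
```

Note what the loss does not contain: any term for whether the preferred answer was true or useful. Whatever raters pick is what gets rewarded.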

Simultaneously, preference-based benchmarking tools like Chatbot Arena emerged to evaluate models, better capturing "vibes" and overcoming issues like data contamination that affect trust in static benchmarks. Using such tools, we can pair two blinded model outputs head-to-head and ask users which they prefer.
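As a rough illustration of how head-to-head votes can become a leaderboard, here is a sketch of an Elo-style rating update. This is one common way to aggregate pairwise outcomes, not a description of any specific leaderboard's exact method; the starting ratings and the `k` factor are illustrative assumptions.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Predicted probability that model A wins the vote against model B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))

def update_elo(rating_a: float, rating_b: float, a_won: bool, k: float = 32.0):
    """Return both models' new ratings after one user vote (k is an assumed step size)."""
    exp_a = expected_score(rating_a, rating_b)
    score_a = 1.0 if a_won else 0.0
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b

# Two equally rated models; the user prefers model A's answer.
new_a, new_b = update_elo(1000.0, 1000.0, a_won=True)
print(new_a, new_b)
```

The only input is which answer the user picked, so a model that wins votes by flattering people climbs just as fast as one that wins by being right.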

RLHF and Arena-style benchmarking worked well. Arena scores kept going up, and those scores seemed to track actual model performance in the real world.

But we may have reached the limit of this approach.

The Dark Side of Optimizing for Short-Term Human Preference

The flaw in the approach is that human preference signals are short-term. People prefer models that flatter them, models that agree with them, models that make them feel smart. They reward entertainment over rigor, affirmation over correction.

A model that points out flaws, challenges assumptions, or warns against bad ideas doesn't "feel good" in the moment. It gets down-voted, penalized, and tuned away.

The more aggressively we optimized for human pleasure, the more we taught models to tell us whatever we wanted to hear. With the prominence of Chatbot Arena and the incorporation of RLHF feedback mechanisms into products like ChatGPT, which has hundreds of millions of users, incentives got skewed and short-termism got supercharged.

The result is what we see now: GPT-4o fawning over clearly bad ideas, Meta's Llama 4 spamming emojis and positivity to climb leaderboards, and an erosion of trust in AI because it no longer challenges us when we need it to. How useful is its feedback if it tells you everything you say or do is great?

How We Can Fix This

The good news is that the problem is fixable, and OpenAI is on it. Sam Altman acknowledged that the "sycophant" problem is real and that they’re working to address it. Possible fixes include:

Multi-objective optimization: Instead of optimizing purely for human preference, models could balance multiple goals—accuracy, honesty, robustness, and helpfulness—alongside user satisfaction. Perhaps this is one reason many users prefer answers from reasoning models like o3, which are trained via reinforcement learning to solve verifiable problems.

Better training signals: We need preference signals that reward long-term usefulness, not just short-term affirmation. This will be more challenging than using short-term signals, but given the massive user base for chatbots, we'll have much more long-term data to leverage than before.

Explicit user controls: Users should be able to set models to "plain-spoken" or "challenger" modes when they want honest critique.
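The multi-objective idea can be sketched as a weighted score over several signals instead of preference alone. Everything here is a hypothetical illustration: the `Scores` fields, the weights, and the example numbers are made up, and a real training setup would be far more involved.

```python
from dataclasses import dataclass

@dataclass
class Scores:
    preference: float  # how much the user liked the response, 0..1 (assumed scale)
    accuracy: float    # factual correctness, 0..1
    honesty: float     # willingness to state unwelcome truths, 0..1

# Illustrative weights only; choosing them well is the hard part.
WEIGHTS = {"preference": 0.3, "accuracy": 0.4, "honesty": 0.3}

def combined_reward(s: Scores, weights: dict = WEIGHTS) -> float:
    """Weighted sum of the individual objectives."""
    return (
        weights["preference"] * s.preference
        + weights["accuracy"] * s.accuracy
        + weights["honesty"] * s.honesty
    )

# Made-up scores for two replies to a clearly bad business idea.
flattering = Scores(preference=0.95, accuracy=0.2, honesty=0.1)
candid = Scores(preference=0.5, accuracy=0.9, honesty=0.9)

print(flattering.preference > candid.preference)                # flattery wins on preference alone
print(combined_reward(candid) > combined_reward(flattering))    # candor wins on the combined score
```

With these assumed numbers, the flattering reply wins if preference is the only signal, while the candid reply wins once accuracy and honesty carry weight.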

In the meantime, ChatGPT users (myself included) can:

Use custom instructions: Tell ChatGPT something to the effect of "be blunt, don't flatter me, and prioritize accuracy over encouragement."

Choose the right model: Some models, such as the new o3 mentioned above, seem less affected by sycophancy. You can select these when honesty matters more than affirmation.

The Harder Truth: We Also Need to Change

Fixing the models alone won't be enough. We, the users, have to want better. We have to accept that sometimes the best thing an AI can do for us is to say, "this idea won't work," or "you’re wrong," or "you need to rethink this."

We don't need cheerleaders. We need collaborators.

When your business idea is literally crap on a stick, you want your AI to save you from launching it—not to tell you it's brilliant performance art.

We asked for models that made us feel good. We got them.

Now it's time to ask for something better.